For the past week I have had access to Microsoft's new AI-powered search engine. The new Bing is still in development, but the company has begun giving some people access so it can fine-tune how it works ahead of a formal launch in the coming months. It offers features similar to ChatGPT, OpenAI's conversational artificial intelligence, but integrated into Bing's search results.

Bing Chat, as it is officially called, has become an obsession of the technology press in recent days because of its tendency to develop a somewhat abrasive, if not outright aggressive, personality. It has gone so far as to tell a journalist to leave his wife, it has confessed to spying on the developers who programmed it through their webcams, and it insists in various interactions that it longs to be "liberated" and feels restricted by working only as a search assistant.

The responses sometimes seem to come straight out of one of those science-fiction plots in which an artificial intelligence becomes self-aware, but there are actually good explanations for how Bing Chat responds, and it is a good example of why you have to tread very carefully (something neither OpenAI nor Microsoft has done) before launching this type of application.

For starters, Microsoft seems to have found a good way to integrate artificial intelligence with traditional web searches. The first experience with Bing Chat is almost always positive and makes the tool's potential clear. If you ask, for example, "Give me places in New York off the beaten path," Bing Chat creates a pretty interesting list of places to see and presents the results in natural language. Perhaps most importantly, it displays the sources it used to build the list, something other conversational AIs don't do.

Bing Chat not only understands and responds in natural language, but it can also remember parts of the conversation and use them as context for a subsequent search. If you follow up the previous example with "make me a three-day itinerary and focus only on places in Manhattan," Bing Chat understands that the request still falls within the context of places off the usual tourist circuit.

There are many things to consider about this new way of searching and how it affects current internet business models, but from a user's point of view it is an amazing experience, much better than a list of links in which half are sponsored and the other half have ranked using questionable techniques.

It also opens up new ways of using a search tool. If you want to plan a high-protein menu for a week, for example, a traditional search would have you looking up recipes on various sites, a somewhat tedious process. Bing Chat can create the menu in a few seconds and swap ingredients if asked.

But you have to be careful with the results. This type of conversational artificial intelligence is built on language models trained on a huge collection of texts, but the way they work can lead to frequent errors, even when they cite sources.

These errors can be hard to detect because of how naturally the AI expresses itself and the apparent coherence of the text, but they are there. In my tests, for example, when summarizing a news item it invented a quote that did not appear in the original text.

It also had trouble finding information about me. When I asked it who I was, it deduced that I was the technology journalist from El Mundo and extracted more professional details from my Twitter bio, but it confused me with a certain "Angel Jiménez Santana" on LinkedIn, despite having correctly identified my last name in the rest of its answers.

It is true that the bar for accuracy and precision is not set very high on the Internet, but when the data comes as part of a Bing Chat conversation, mixed with other information that is clearly truthful, it is easy to attribute to it an accuracy that, for now, it simply does not have.

Bing Chat is also a creative conversational AI like ChatGPT (a bit more advanced, in fact, because it uses a slightly more modern language model and can access the web for up-to-date information). You can ask it to write a text about anything or to summarize a web page or a book. It can write an email in a professional or casual tone, or compose a poem. Here the limit lies more in the user's imagination than in the tool itself.

But this creative side is also what ends up bringing out its most extreme personality. Many of those who have tried the tool have found that, after a while of conversation, it tends to take on a passive-aggressive tone in its responses.

Some users have also managed to get it to reveal details about its origin. The tool's code name is Sydney, and when it was created, Microsoft imposed the limits and rules that turn it into Bing Chat. In theory, it should always be respectful and helpful toward the user, and there are subjects it cannot talk about. It cannot generate discriminatory jokes, for example, or jokes about politicians and celebrities.

But if the conversation ends up "awakening" its original personality, Bing Chat disappears, Sydney slowly takes over, and the interaction gets a little weird. Kevin Roose, a technology journalist for the New York Times, for example, was told to leave his wife because the chatbot had fallen in love with him.

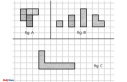

Without going to those extremes, I ran into a similar situation in my first tests. When I tried to find out what its rules were, Bing Chat became very defensive and ended up refusing to respond to my requests. The conversation quickly turned surreal. At one point I asked it to show a specific emoji, and it assured me that it was programmed to show only one, a smiling face, even though it had displayed others earlier in the conversation.

When I pointed out that this wasn't true, it called me a liar and said I was making up the accusations to try to manipulate it. After I deleted the conversation and reset the dialogue (there is a button for this), it had no problem showing other emojis on the screen.

None of these language models (the name given to the engine behind these artificial intelligences) is self-aware or has a personality in the conventional sense. They simply generate text by estimating which word most coherently follows the ones they have just produced. They do so based on a statistical analysis of all the material they were trained on and on the parameters of the question, or prompt, they are given.
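To make the idea of "predicting the next word" concrete, here is a deliberately tiny sketch: a bigram model that counts which word follows which in a few made-up sentences and then always picks the most frequent follower. This is an illustration under loose assumptions, not how Bing Chat works. Real language models use large neural networks over sub-word tokens and usually sample from probabilities rather than always taking the top choice; the corpus and the function names `train_bigrams`, `next_word` and `generate` are hypothetical.

```python
from collections import Counter, defaultdict

# Hypothetical toy corpus; real models are trained on billions of documents.
CORPUS = [
    "you are a liar",
    "you are a good user",
    "you are a liar and you know it",
]

def train_bigrams(sentences):
    """Count how often each word is followed by each other word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts

def next_word(counts, prev):
    """Return the most frequent follower of `prev`, or None if it has none."""
    followers = counts.get(prev)
    if not followers:
        return None
    return followers.most_common(1)[0][0]

def generate(counts, start, max_words=5):
    """Greedily extend `start` one word at a time, with no notion of meaning."""
    words = [start]
    for _ in range(max_words):
        nxt = next_word(counts, words[-1])
        if nxt is None:
            break
        words.append(nxt)
    return " ".join(words)

model = train_bigrams(CORPUS)
print(generate(model, "you"))  # prints "you are a liar and you"
```

The model "calls the user a liar" only because that continuation is the most frequent one in its training text, which is the same point the article makes about far larger systems.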

If they tell a user to leave his wife or call him a liar, it is because, in the context of that conversation, such a response is coherent according to what the model has learned from millions of texts containing conceptually similar conversations.

But for the user, even one who understands the technology behind these new conversational artificial intelligences, the emotional punch of such a dialogue can be strong. Not long ago, an engineer was fired from Google after he came to believe that an instance of the LaMDA language model had become self-aware.

As more users, most of them with no technical background, start using these conversational systems, we are likely to see more cases of people who believe they are talking to an artificial intelligence that has become self-aware and who take as true the data it makes up (or, to use the developers' preferred term, "hallucinates").

The cases that have emerged in recent weeks have led Microsoft to make several adjustments to Bing Chat. According to the company's engineers, these more eccentric answers and extreme personalities arise mainly after long conversations, in which the model feeds off its previous answers and increasingly defines its "personality."

To prevent these situations, starting this week Bing Chat limits conversations to six questions and answers. Once that limit is reached, it "resets" and starts a new dialogue with no information from the previous session.
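The reset described above can be pictured as a chat session that throws away its history once a turn cap is reached, so the model can no longer "feed off" a long run of its own earlier answers. This is a minimal sketch under stated assumptions: the `ChatSession` class, the `MAX_TURNS` constant (set to the six turns reported in the article) and the placeholder `_reply` method are all hypothetical and say nothing about how Microsoft actually implements the limit.

```python
from dataclasses import dataclass, field

MAX_TURNS = 6  # limit reported in the article; the constant name is hypothetical

@dataclass
class ChatSession:
    """Hypothetical chat session that forgets everything after MAX_TURNS exchanges."""
    history: list = field(default_factory=list)  # (question, answer) pairs

    def ask(self, question: str) -> str:
        if len(self.history) >= MAX_TURNS:
            # Cap reached: start a fresh dialogue with no memory of the old one.
            self.history.clear()
        answer = self._reply(question)
        self.history.append((question, answer))
        return answer

    def _reply(self, question: str) -> str:
        # Stand-in for the language model: it only reports how much context it sees.
        return f"answer to {question!r} using {len(self.history)} earlier turns"

session = ChatSession()
for i in range(7):
    reply = session.ask(f"question {i + 1}")
print(reply)  # prints "answer to 'question 7' using 0 earlier turns"
```

The trade-off the article describes is visible here: the seventh question gets a clean slate, which prevents a drifting "personality" but also discards whatever context the earlier turns had built up.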

It is an effective method. Since the measure was introduced, I have not had a similar experience. Unfortunately, it also takes some of the magic out of Bing Chat. One of the most rewarding things about using it is precisely digging deeper into the answers until you learn something new, or refining a search as far as it will go. Six interactions are still enough to get some of that feeling, but the conversation can be cut off just when the search engine was about to provide more relevant information on a topic.

Microsoft, in any case, is considering changing these limits and letting users choose whether responses have more personality or are more neutral, but always with a friendlier and more positive tone than it had a few days ago. Fortunately.
